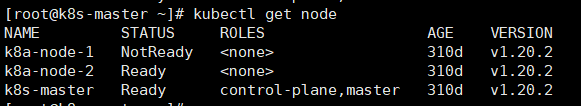

Fault discovery

`kubectl get node`

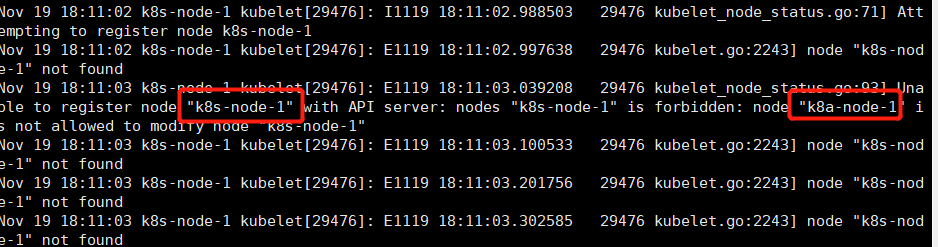

Fault analysis

a. Check the node status
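The commands used for this check are not preserved in the post. As a minimal sketch, assuming `kubectl` is already configured on the master, the helper below (the name `list_unready_nodes` is hypothetical) prints every node whose STATUS column does not start with `Ready`:

```shell
# Hypothetical helper, not from the original post.
# Prints the names of nodes whose STATUS is anything other than Ready.
list_unready_nodes() {
  kubectl get nodes --no-headers | awk '$2 !~ /^Ready/ { print $1 }'
}
```

Running `kubectl describe node <name>` on a node it reports then shows that node's conditions and recent events, which is usually the quickest way to see why it is not Ready.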

Troubleshooting approach

a. Taint the affected node and evict the pods running on it

Fault handling

- Evict the node's resources

`# Run on the master`
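The eviction commands themselves are not preserved above. One common way to do this, shown here as an assumption rather than the author's exact method, is `kubectl drain`, which marks the node unschedulable and then evicts its pods. The function name `evict_node` is hypothetical:

```shell
# Hypothetical helper, not from the original post.
# Marks the node unschedulable and evicts its pods.
#   --ignore-daemonsets      skip DaemonSet-managed pods, which cannot be evicted
#   --delete-emptydir-data   allow eviction of pods that use emptyDir volumes
evict_node() {
  node="$1"
  kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data
}
```

For the node in this example the call would be `evict_node k8a-node-1`. On kubectl releases older than 1.20 the second flag is spelled `--delete-local-data`.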

- Delete the node

`# Run on the master`

`[root@k8s-master ~]# kubectl delete node k8a-node-1`

`node "k8a-node-1" deleted`

- Reset the node

`# Run on the affected node`
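The reset commands are not preserved above. A minimal sketch, assuming the node was set up with kubeadm: `kubeadm reset` reverts what `kubeadm join` did but leaves CNI configuration in place, so the hypothetical helper below also clears the CNI config directory. The default path `/etc/cni/net.d` is an assumption about this cluster's layout:

```shell
# Hypothetical helper, not from the original post. Run as root on the affected node.
# $1 (optional): CNI config directory; defaults to /etc/cni/net.d (assumed layout).
reset_node() {
  cni_dir="${1:-/etc/cni/net.d}"
  # --force skips the interactive confirmation prompt.
  kubeadm reset --force &&
    rm -rf "${cni_dir:?}"/*
}
```

Calling `reset_node` with no argument uses the default directory.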

- Obtain a new join token

`# Run on the master`
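The command for this step is not preserved above. With kubeadm, a new token and the matching join command can usually be generated in one call; the wrapper name `print_join_command` below is hypothetical:

```shell
# Hypothetical helper, not from the original post. Run on the master.
# Creates a new bootstrap token and prints the full `kubeadm join ...` command for it.
print_join_command() {
  kubeadm token create --print-join-command
}
```

The printed line contains the API server endpoint, the token, and the CA certificate hash, and can be copied to the node as-is.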

- Rejoin the node to the cluster

`# Run on the affected node`
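The actual join command is not preserved above, and its values are specific to each cluster. As a sketch of its general shape, the hypothetical helper below takes the endpoint, token, and CA certificate hash as arguments instead of hard-coding them:

```shell
# Hypothetical helper, not from the original post. Run as root on the affected node.
# $1: API server endpoint (host:port)
# $2: bootstrap token generated on the master
# $3: CA certificate hash in the form sha256:<hex>
join_cluster() {
  kubeadm join "$1" --token "$2" --discovery-token-ca-cert-hash "$3"
}
```

All three values come from the join command generated on the master.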

Verification

`[root@k8s-master ~]# kubectl get node`
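A rejoined node can take a short while to report Ready. Instead of re-running `kubectl get node` by hand, one option is to block until the Ready condition is true. This is a sketch, with the helper name `wait_node_ready` and the 120-second default timeout chosen for illustration:

```shell
# Hypothetical helper, not from the original post. Run on the master.
# $1: node name
# $2 (optional): timeout, defaults to 120s (assumed value)
wait_node_ready() {
  kubectl wait --for=condition=Ready "node/$1" --timeout="${2:-120s}"
}
```

For the node in this example the call would be `wait_node_ready k8a-node-1`. It returns non-zero if the node is still not Ready when the timeout expires.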